Robot Vision Part 1: Camera Calibration

Intrinsic Camera Calibration for Robot Vision Using OpenCV and Python

Processing visual information reliably requires an accurate image from the camera. This matters for robots during navigation, and also for rendering virtual elements over real-world objects (augmented reality). The source code for this project can be found here.

The image on the left shows the distortion caused by a wide-angle lens; its undistorted version is on the right.

Why Camera Calibration?

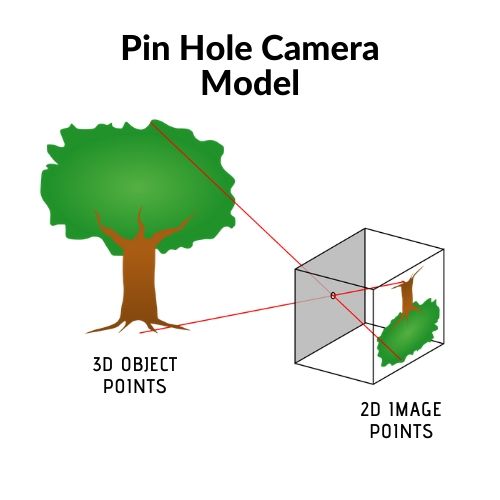

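Most cameras on the market can be described by the pinhole model, in which 3D objects are projected onto a 2D surface (the camera sensor). However, real lenses do not produce a perfect projection: they add radial distortion, which makes straight lines appear curved (especially towards the image edges), and tangential distortion, which occurs when the lens is not exactly parallel to the sensor. We therefore need to correct the camera image.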
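To make the pinhole model concrete, here is a minimal NumPy sketch of the ideal projection, before any lens distortion is applied. The helper project_pinhole and the intrinsics fx, fy, cx, cy are illustrative assumptions, not values from a real camera; K has the same 3x3 layout as the camera matrix mtx that calibration estimates later.

```python
import numpy as np


def project_pinhole(point_3d, K):
    """Project a 3D point (X, Y, Z) in camera coordinates to pixel coordinates (u, v).

    Assumes an ideal pinhole camera with no lens distortion and Z > 0.
    """
    X, Y, Z = point_3d
    # Perspective division: points farther away (larger Z) move toward the image center
    x, y = X / Z, Y / Z
    # Apply the intrinsic matrix: scale by the focal lengths, shift by the principal point
    u, v, w = K @ np.array([x, y, 1.0])
    return float(u / w), float(v / w)


# Illustrative (not calibrated) intrinsics for a 640x480 camera
fx, fy, cx, cy = 500.0, 500.0, 320.0, 240.0
K = np.array([[fx, 0.0, cx],
              [0.0, fy, cy],
              [0.0, 0.0, 1.0]])

print(project_pinhole((0.0, 0.0, 2.0), K))  # a point on the optical axis lands on (cx, cy)
print(project_pinhole((0.1, -0.05, 1.0), K))
```

Real lenses then bend these ideal positions, which is exactly what the distortion model below describes.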

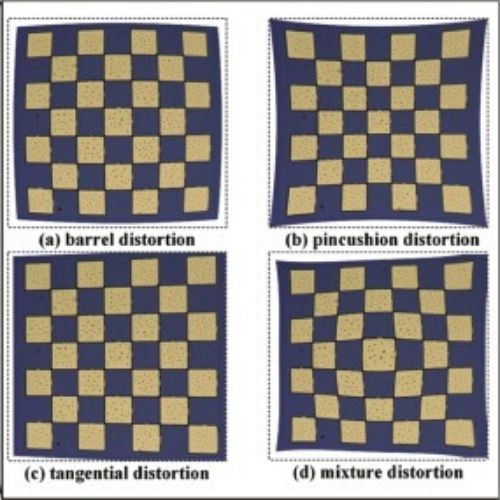

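These distortions can be modeled, and therefore corrected, using the Brown-Conrady model. Let $(x, y)$ be a point in normalized image coordinates, measured from the optical center, and let $r$ be its radial distance from the center, so that $r^2 = x^2 + y^2$. In the form used by OpenCV, the model maps this ideal point to its distorted position. The radial component is:

$$x_{\text{radial}} = x\,(1 + k_1 r^2 + k_2 r^4 + k_3 r^6), \qquad y_{\text{radial}} = y\,(1 + k_1 r^2 + k_2 r^4 + k_3 r^6)$$

the tangential component is:

$$\Delta x_{\text{tangential}} = 2 p_1 x y + p_2 (r^2 + 2x^2), \qquad \Delta y_{\text{tangential}} = p_1 (r^2 + 2y^2) + 2 p_2 x y$$

and the combined (distorted) coordinates are:

$$x_d = x\,(1 + k_1 r^2 + k_2 r^4 + k_3 r^6) + 2 p_1 x y + p_2 (r^2 + 2x^2)$$

$$y_d = y\,(1 + k_1 r^2 + k_2 r^4 + k_3 r^6) + p_1 (r^2 + 2y^2) + 2 p_2 x y$$

Thus, finding the five distortion coefficients $(k_1, k_2, p_1, p_2, k_3)$, the order in which OpenCV returns them, lets us invert this mapping and undistort the image.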
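The model above can be written directly as code. This is a minimal pure-Python sketch, assuming normalized coordinates and made-up coefficient values; the function names distort_normalized and undistort_normalized are hypothetical. Going from distorted back to ideal coordinates has no closed-form solution, so the sketch inverts the model with simple fixed-point iteration. That choice is an illustration, not OpenCV's implementation.

```python
def distort_normalized(x, y, k1, k2, p1, p2, k3):
    """Apply the Brown-Conrady radial + tangential model to a normalized point (x, y)."""
    r2 = x * x + y * y
    radial = 1 + k1 * r2 + k2 * r2**2 + k3 * r2**3  # 1 + k1 r^2 + k2 r^4 + k3 r^6
    x_d = x * radial + 2 * p1 * x * y + p2 * (r2 + 2 * x * x)
    y_d = y * radial + p1 * (r2 + 2 * y * y) + 2 * p2 * x * y
    return x_d, y_d


def undistort_normalized(x_d, y_d, k1, k2, p1, p2, k3, iterations=20):
    """Approximately invert distort_normalized by fixed-point iteration."""
    x, y = x_d, y_d  # initial guess: the distorted point itself
    for _ in range(iterations):
        r2 = x * x + y * y
        radial = 1 + k1 * r2 + k2 * r2**2 + k3 * r2**3
        dx = 2 * p1 * x * y + p2 * (r2 + 2 * x * x)
        dy = p1 * (r2 + 2 * y * y) + 2 * p2 * x * y
        # Solve x_d = x * radial + dx for x, holding radial and dx at the current estimate
        x = (x_d - dx) / radial
        y = (y_d - dy) / radial
    return x, y


coeffs = dict(k1=-0.2, k2=0.05, p1=0.001, p2=-0.0005, k3=0.0)  # illustrative values only
xd, yd = distort_normalized(0.3, -0.2, **coeffs)
x, y = undistort_normalized(xd, yd, **coeffs)
print(xd, yd)
print(x, y)  # should be very close to the original (0.3, -0.2)
```

For mild distortion like this the iteration converges quickly; strong wide-angle distortion may need more iterations or a better starting guess.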

Getting Things Ready

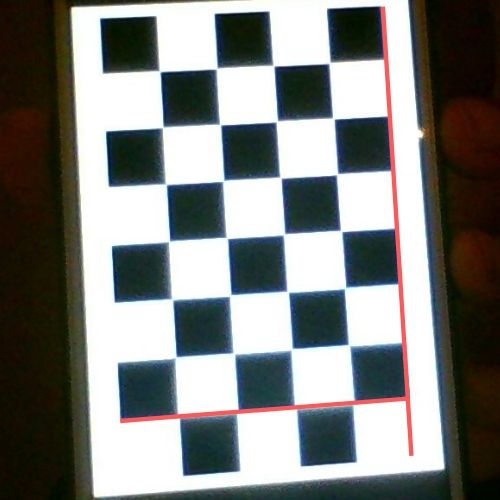

We will be using a chessboard pattern for this process, as it is very easy to obtain. The (7,4) pattern size used in the code counts inner corners, so the board itself has 8x5 squares. I display the chessboard on my mobile phone screen (gotta go green). Take at least 10 pictures of the chessboard with your camera, varying its position and angle. You need Python 3 and OpenCV 4.1 or higher; pickle, from the Python standard library, is used to store the calibration data for future use.

Calibrating the Camera

For demonstration purposes I am using only one image; in practice, repeat the detection step below for every picture you took. In OpenCV, camera calibration can be done with cv2.calibrateCamera(objpoints, imgpoints, gray.shape[::-1], None, None), which maps the 3D object points of the real chessboard to the 2D image points in the camera image. It returns ret (the overall RMS reprojection error, in pixels), mtx (the camera matrix, i.e. the intrinsic parameters), dist (the distortion coefficients), and rvecs and tvecs (the rotation and translation of the chessboard in each view, i.e. the extrinsic parameters).
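The first value returned by cv2.calibrateCamera is the overall root-mean-square (RMS) reprojection error: the typical distance, in pixels, between the detected corners and the positions where the estimated camera model projects the 3D object points. The sketch below, a hypothetical helper rms_reprojection_error, computes that quantity from two point arrays, assuming the error is pooled over all points of all views; the coordinates are made up. With real data, the projected points for view i could come from cv2.projectPoints(objp, rvecs[i], tvecs[i], mtx, dist).

```python
import numpy as np


def rms_reprojection_error(observed, projected):
    """Root-mean-square distance (in pixels) between detected and reprojected points.

    Accepts arrays of shape (N, 2) or OpenCV's (N, 1, 2) corner layout.
    """
    observed = np.asarray(observed, dtype=np.float64).reshape(-1, 2)
    projected = np.asarray(projected, dtype=np.float64).reshape(-1, 2)
    # Squared Euclidean distance for each point
    squared_dist = np.sum((observed - projected) ** 2, axis=1)
    return float(np.sqrt(np.mean(squared_dist)))


# Made-up detections and reprojections for three corners
observed = [[100.0, 200.0], [150.0, 200.0], [200.0, 200.0]]
projected = [[100.3, 199.6], [150.0, 200.0], [199.7, 200.4]]
print(rms_reprojection_error(observed, projected))
```

A smaller value means the estimated model fits the detected corners more closely.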

First, we import the libraries and generate the 3D object points (objpoints), assuming the chessboard lies stationary on the XY plane, i.e. Z = 0 for every point. Each point is expressed in units of one chessboard square.

```python
import numpy as np
import cv2
import pickle

# Arrays to store object points and image points from all the images
objpoints = []  # 3D points in real-world space
imgpoints = []  # 2D points in the image plane

# Prepare object points: (0,0,0), (1,0,0), (2,0,0), ..., (6,3,0)
objp = np.zeros((7*4, 3), np.float32)
objp[:, :2] = np.mgrid[0:7, 0:4].T.reshape(-1, 2)
```
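The mgrid line is compact but easy to misread. This sketch wraps it in a hypothetical helper, make_object_points, and adds an optional square_size parameter that is not in the original code: with the default of 1.0 the units are "one square", as above, while passing the real square size (for example in millimetres) makes the translation vectors tvecs come out in the same unit.

```python
import numpy as np


def make_object_points(cols, rows, square_size=1.0):
    """3D chessboard corner positions on the Z = 0 plane.

    cols, rows: inner corners per row and per column, as passed to findChessboardCorners.
    square_size: edge length of one square in your chosen unit.
    """
    objp = np.zeros((cols * rows, 3), np.float32)
    # mgrid builds x and y index grids; .T.reshape(-1, 2) lists (x, y) pairs with x varying fastest
    objp[:, :2] = np.mgrid[0:cols, 0:rows].T.reshape(-1, 2)
    return objp * square_size


print(make_object_points(3, 2))  # small board, easy to read
print(make_object_points(7, 4, square_size=25.0)[-1])  # last corner of the 7x4 board
```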

Next, we read in our image and find the chessboard corners using cv2.findChessboardCorners(gray, (7,4), None).

```python
fname = 'chessboard.jpg'  # hypothetical file name: use the path to one of your chessboard photos
img = cv2.imread(fname)
if img is None:
    raise FileNotFoundError(fname)  # imread returns None instead of raising
gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)  # convert to grayscale

# Find the chessboard corners (7x4 inner corners)
found, corners = cv2.findChessboardCorners(gray, (7, 4), None)
```

Upon successful detection, found is returned as True. The corner positions can then be refined to sub-pixel accuracy with cv2.cornerSubPix() and drawn over the image with cv2.drawChessboardCorners().

```python
# If found, add object points and image points (after refining them)
if found:
    # Stop refining after 30 iterations or when a corner moves less than 0.001 px
    criteria = (cv2.TERM_CRITERIA_EPS + cv2.TERM_CRITERIA_MAX_ITER, 30, 0.001)
    corners_refined = cv2.cornerSubPix(gray, corners, (11, 11), (-1, -1), criteria)
    objpoints.append(objp)
    imgpoints.append(corners_refined)

    # Draw the corners on a copy so the original image stays clean for undistortion
    img_corners = cv2.drawChessboardCorners(img.copy(), (7, 4), corners_refined, found)
    cv2.imshow('corners', img_corners)
    cv2.waitKey(0)
```

Now we can use these points to calculate the distortion coefficients and undistort the image with cv2.undistort().

```python
# Find the camera matrix and distortion coefficients; the image size is (width, height)
ret, mtx, dist, rvecs, tvecs = cv2.calibrateCamera(objpoints, imgpoints, gray.shape[::-1], None, None)
print('RMS reprojection error (px):', ret)

# Undistort
calibrated = cv2.undistort(img, mtx, dist, None, mtx)
cv2.imshow('calibrated', calibrated)
cv2.waitKey(0)
cv2.destroyAllWindows()
```

Let’s use pickle.dump() to store the calibration data for further use.

```python
# Store the data
with open('calibrated.pickle', 'wb') as f:
    pickle.dump([dist, mtx], f)
```

In upcoming posts, we’ll simply load the distortion coefficients dist and the camera matrix mtx from calibrated.pickle.

```python
import pickle

# Load the data (saved in the order [dist, mtx])
with open('calibrated.pickle', 'rb') as f:
    dist, mtx = pickle.load(f)
```
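If you would rather not depend on the order of a list, a small variant (an assumption on my part, not the format used above) stores the two arrays in a dictionary under named keys. The helpers save_calibration and load_calibration are hypothetical, and the sketch round-trips placeholder values through a temporary file to check that nothing is lost.

```python
import os
import pickle
import tempfile

import numpy as np


def save_calibration(path, mtx, dist):
    """Store the camera matrix and distortion coefficients under named keys."""
    with open(path, 'wb') as f:
        pickle.dump({'mtx': mtx, 'dist': dist}, f)


def load_calibration(path):
    """Return (mtx, dist) from a file written by save_calibration."""
    with open(path, 'rb') as f:
        data = pickle.load(f)
    return data['mtx'], data['dist']


# Round-trip check with placeholder values (not real calibration results)
mtx = np.array([[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]])
dist = np.array([[-0.2, 0.05, 0.001, -0.0005, 0.0]])
path = os.path.join(tempfile.mkdtemp(), 'calibrated.pickle')
save_calibration(path, mtx, dist)
mtx_loaded, dist_loaded = load_calibration(path)
print(np.array_equal(mtx, mtx_loaded), np.array_equal(dist, dist_loaded))
```

As with any pickle file, only load calibration data you trust: unpickling data from an untrusted source can execute arbitrary code.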